Critical factors in generating high-quality BIM data

Architects, engineers, and contractors have been working with data for years. What is new is the number of ways the industry now has to capture, apply, and connect that data, from the design of a facility all the way through to its management and maintenance.

This new reality demands new rigour. The shift from creating drawings to creating robust data that also generates drawings is subtle, but it has enormous downstream consequences.

Organizations that effectively connect objects in the project information model to the asset information model delivered after completion have come to realize that this is not something that can be added on top of their existing practices. It needs to be integrated into their ongoing processes and workflows.

Integrating good data practices into existing workflows is a step that is often missed, and it is a key reason bad BIM data gets created. So where does it all go wrong?

Not knowing where you are going

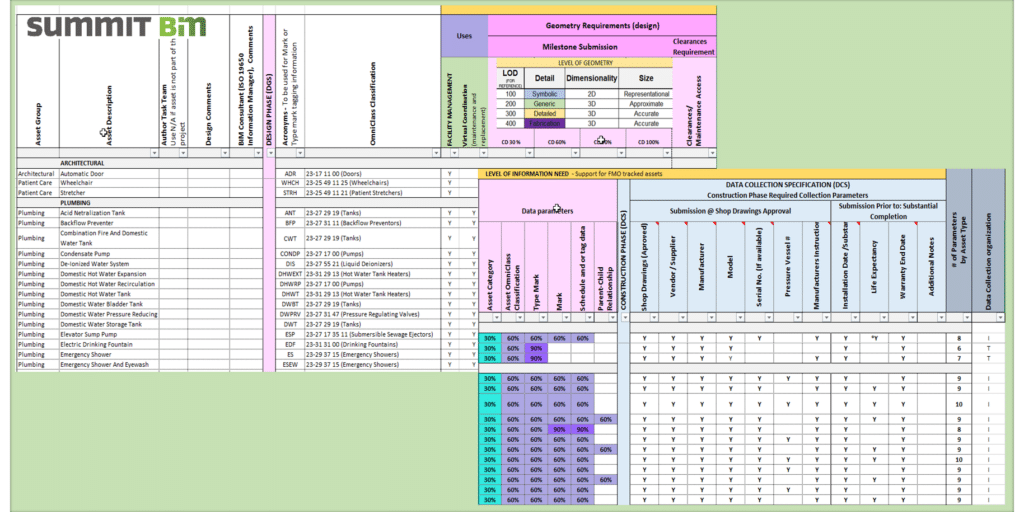

BIM standards vary widely. Often, they are tied to a particular owner, limited to deliverable specifications, or don’t look beyond the design process. Management teams need to understand how the data will ultimately be used, and what BIM strategy and processes they need to support that use.

Why do we need this level of thoroughness?

The deliverable is no longer a 2D document set. It is a database. While you can get away with entering data in inconsistent locations and using inconsistent naming conventions when the output is printed, this approach falls apart as soon as you want to generate schedules and reports from the model. In the long run, taking the time to establish your organization’s BIM processes and workflows is faster and makes for a smoother project.
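As a rough illustration of why printed output hides the problem, the sketch below groups door elements by type name the way a schedule would. The element records and the `type_name` field are hypothetical stand-ins for data exported from a model; they do not reflect any particular BIM tool's API.

```python
from collections import Counter

# Hypothetical element records, as they might look after export from a model.
# The same door type (D1) has been entered three different ways.
doors = [
    {"id": 101, "type_name": "D1 Single Flush"},
    {"id": 102, "type_name": "D1 - Single Flush"},
    {"id": 103, "type_name": "d1 single flush"},
    {"id": 104, "type_name": "D2 Double Glazed"},
]

def door_schedule(elements):
    """Group elements by their literal type name, as a schedule would."""
    return Counter(e["type_name"] for e in elements)

schedule = door_schedule(doors)
for type_name, count in sorted(schedule.items()):
    print(f"{type_name}: {count}")
```

Three D1 doors and one D2 door should produce a two-row schedule; the inconsistent entries produce four rows instead, each with a count of one. On a printed drawing, all four labels would have looked perfectly acceptable.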

Believing that the software will do all the work

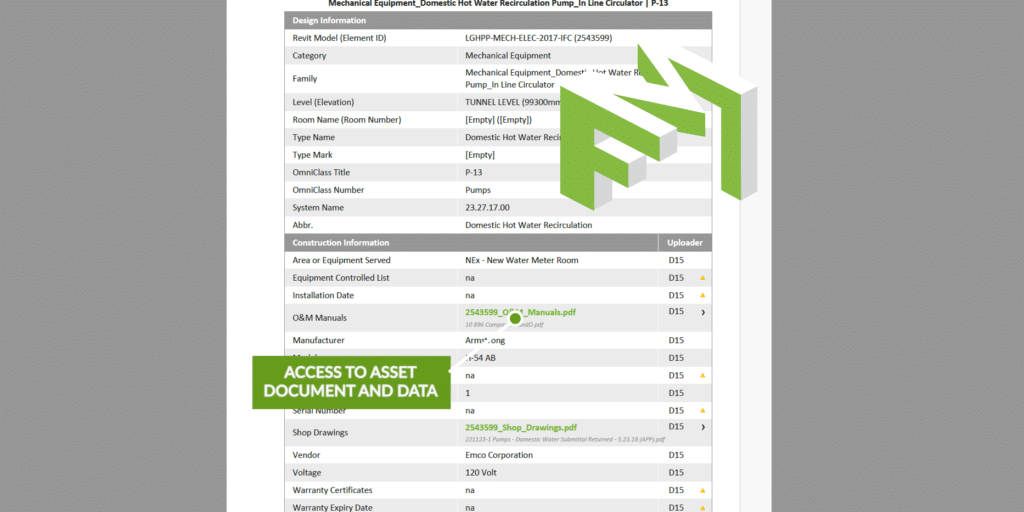

A common misconception is that modelling in “BIM software” automatically creates BIM-ready datasets. This is not necessarily true. Data entry is what creates the datasets, and the data must be organised so that the software is able to extract and export it as meaningful information. Early errors compound as the project moves forward and degrade the ‘golden thread’ of information about the building.

Following bad data practices

Bad data can be considered under four simple groupings:

1. Duplication: Data is entered multiple times

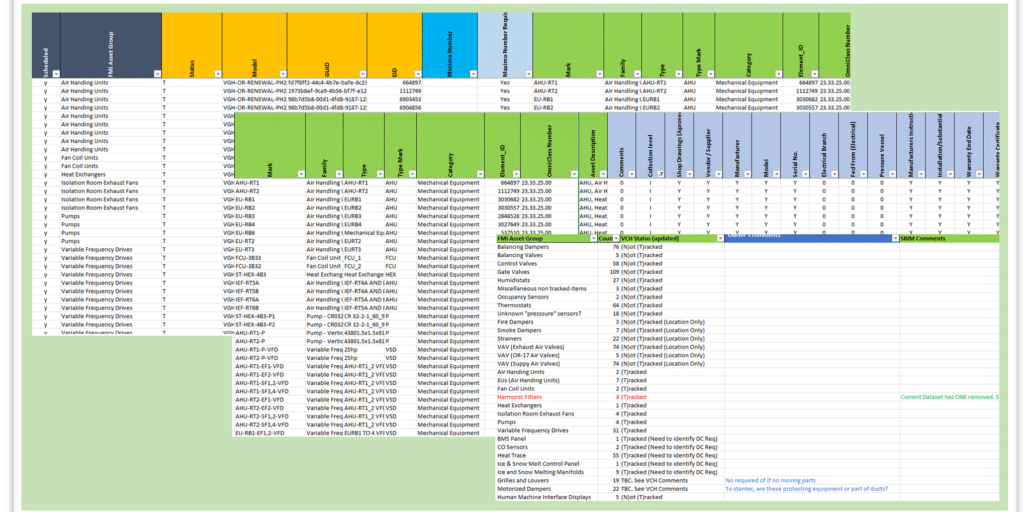

Following a drafting mentality, each team generates the information that they have historically represented in a drawing or CAD workflow. This approach results in multiple entries for the same asset.

- Architectural and electrical consultants both placing light fixtures, or architectural and plumbing consultants both placing bathroom fixtures, creates duplicates, and it is unclear which instance should be trusted as correct and used.

- A design consultant provides conflicting information, e.g. ‘Phase’ = Existing while the ‘Comments’ field says New.

- Multiple parameters are used as tag containers, which makes it difficult to tell which value is the right one, e.g. ‘Mark’ = AHU-01, ‘Comments’ = AHU-03.
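Once data from all consultants is in one place, the duplication problems above can often be caught with simple checks. The following is a minimal sketch, assuming element records merged into Python dictionaries; the field names, the tag pattern, and the 0.05 tolerance are hypothetical choices for illustration.

```python
import re
from collections import defaultdict

# Hypothetical records merged from several consultants' models.
# Field names are illustrative, not parameters of a specific BIM tool.
elements = [
    {"id": "A-17", "discipline": "Architectural", "category": "Light Fixture",
     "location": (12.0, 4.5, 3.0), "mark": "LF-01", "comments": ""},
    {"id": "E-03", "discipline": "Electrical", "category": "Light Fixture",
     "location": (12.0, 4.5, 3.0), "mark": "LF-01", "comments": ""},
    {"id": "M-22", "discipline": "Mechanical", "category": "Air Handling Unit",
     "location": (40.0, 8.0, 0.0), "mark": "AHU-01", "comments": "AHU-03"},
]

# Assumed tag format, e.g. "AHU-01": capital letters, a hyphen, digits.
TAG = re.compile(r"[A-Z]{1,4}-\d{2,3}")

def find_duplicates(elements, tolerance=0.05):
    """Group elements of the same category placed at (nearly) the same point."""
    groups = defaultdict(list)
    for e in elements:
        # Snap coordinates to a grid of size `tolerance` so coincident points
        # share a key (points straddling a grid line can still be missed).
        key = (e["category"],) + tuple(round(c / tolerance) for c in e["location"])
        groups[key].append(e["id"])
    return [ids for ids in groups.values() if len(ids) > 1]

def find_tag_conflicts(elements):
    """Flag elements whose Comments field holds a tag that differs from Mark."""
    return [
        (e["id"], e["mark"], e["comments"])
        for e in elements
        if TAG.fullmatch(e["comments"]) and e["comments"] != e["mark"]
    ]

print(find_duplicates(elements))     # [['A-17', 'E-03']]
print(find_tag_conflicts(elements))  # [('M-22', 'AHU-01', 'AHU-03')]
```

Snapping to a grid keeps the sketch short; a more careful check would compare distances directly. Either way, a script can only find the duplicates. Deciding which consultant owns the asset is a process decision.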

2. Missing: Not provided

Information required to support downstream use by facility maintenance and operations teams is either not generated, or generated in a way that prevents the data from being extracted for other uses.

- Naming conventions that support the parent/child relationship between assets are either not established or not followed.

- Symbols or 2D lines are used to represent a real tracked asset.

- Room information is not placed correctly, so assets do not reflect the room they belong to.
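Gaps like these can be detected before handover rather than discovered by the facility team. This sketch assumes a hypothetical asset register in which child assets are named with a dotted suffix on their parent's name (for example `AHU-01.FAN-01`); that convention is an illustration, not a standard.

```python
# Hypothetical asset register exported for FM handover. The dotted
# parent/child naming scheme ("AHU-01.FAN-01") is an assumed convention.
assets = [
    {"name": "AHU-01", "room": "B012"},
    {"name": "AHU-01.FAN-01", "room": "B012"},
    {"name": "AHU-02.FLT-01", "room": "B014"},  # parent AHU-02 is not in the register
    {"name": "LF-07", "room": None},            # no room assigned
]

def missing_parents(assets):
    """Return child assets whose parent name does not exist in the register."""
    names = {a["name"] for a in assets}
    orphans = []
    for a in assets:
        # "AHU-01.FAN-01" -> parent "AHU-01"; names without a dot have no parent.
        parent, sep, _child = a["name"].rpartition(".")
        if sep and parent not in names:
            orphans.append(a["name"])
    return orphans

def missing_rooms(assets):
    """Return assets with no room, which the FM team cannot locate."""
    return [a["name"] for a in assets if not a["room"]]

print(missing_parents(assets))  # ['AHU-02.FLT-01']
print(missing_rooms(assets))    # ['LF-07']
```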

3. Inaccurate: Scattered, mismatched data

Modelling software provides an exceptional framework for creating data during the design phase. But as design data is added to the model by multiple project team members, each of whom may put their own creative spin on the input, the conditions are perfect for data issues.

Without well-defined standards, mismatched and scattered data abounds. We often see:

- Data entered in different fields from the ones the software generated.

- A lack of naming conventions leads to non-descriptive names and makes sorting and classification difficult.

- Tags stored in multiple locations lead to confusion and errors.

- Useless information, such as company initials, is appended to data.

- Naming convention guidelines are ‘light’ and therefore not specific enough to provide information of any value.
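A naming convention is specific enough when it can be checked automatically. The pattern below is a hypothetical convention (`<SYSTEM>-<TYPE>-<LEVEL>-<SEQ>`) invented for this sketch; the point is that a precise rule can flag both non-descriptive names and appended extras such as company initials.

```python
import re

# A hypothetical naming convention, for illustration only:
#   <SYSTEM>-<TYPE>-<LEVEL>-<SEQ>, e.g. "HVAC-AHU-L02-001"
NAME_PATTERN = re.compile(
    r"(?P<system>[A-Z]{2,5})-(?P<type>[A-Z]{2,5})-(?P<level>L\d{2})-(?P<seq>\d{3})"
)

def check_name(name):
    """Return None if the name follows the convention, else a short reason."""
    if NAME_PATTERN.fullmatch(name):
        return None
    if NAME_PATTERN.match(name):
        # A valid name followed by extra characters, e.g. appended initials.
        return "extra text after a valid name"
    return "does not follow the naming convention"

names = ["HVAC-AHU-L02-001", "HVAC-AHU-L02-002_JS", "Unit 3"]
for name in names:
    print(f"{name}: {check_name(name) or 'OK'}")
```

Because the pattern uses named groups, a name that passes can also be split into system, type, and level for sorting and classification, which a ‘light’ guideline cannot support.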

4. Incorrect: Disconnected data and human error

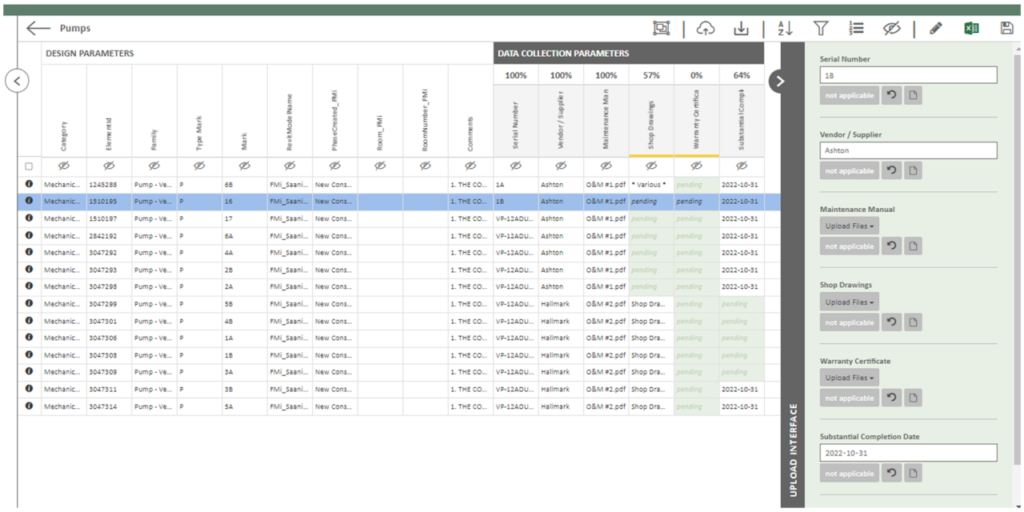

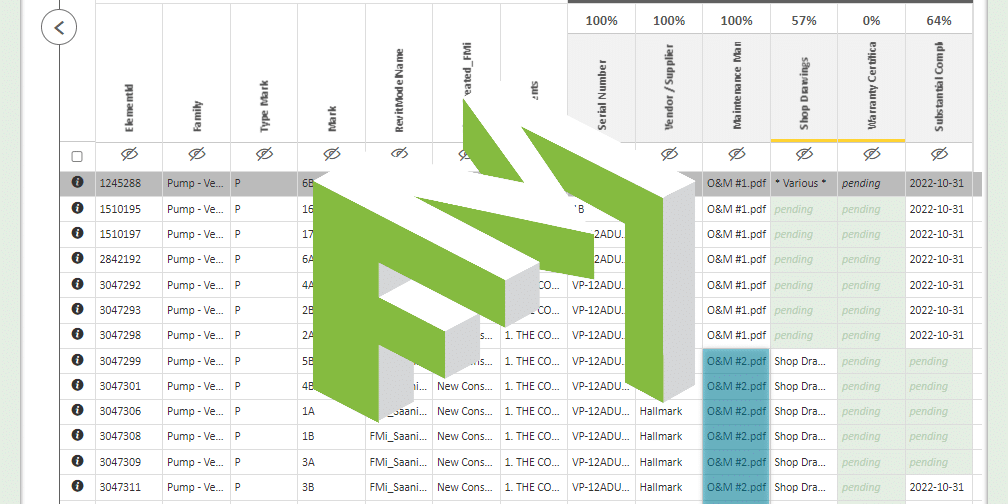

The unique information gathered about an asset during the construction phase is critical for the ongoing, efficient management and maintenance of a facility. Yet specialist contractors and installers remain heavily reliant on static PDFs and printed files, which creates a disconnect from the data in the project information model. Basic data such as make, model, serial number, and warranty information, as well as more technical data, is then often extracted from these documents by hand. This leads to the most basic and common kind of bad data: dependable old human error.
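Validating manually transcribed records at the point of entry catches some of these errors before they reach the asset register. The sketch below assumes a hypothetical record layout with ISO-formatted (YYYY-MM-DD) warranty dates; the required fields mirror the basic data listed above.

```python
from datetime import date

# Fields assumed to be required at handover (hypothetical layout).
REQUIRED = ("make", "model", "serial_number", "warranty_start", "warranty_end")

def check_record(record):
    """Return a list of problems found in one manually transcribed record."""
    problems = [f"missing {field}" for field in REQUIRED if not record.get(field)]
    start, end = record.get("warranty_start"), record.get("warranty_end")
    if start and end:
        try:
            if date.fromisoformat(end) <= date.fromisoformat(start):
                problems.append("warranty end is not after warranty start")
        except ValueError:
            problems.append("warranty dates are not in YYYY-MM-DD format")
    return problems

# A typical transcription slip: serial number skipped, end date mistyped.
record = {
    "make": "Acme",
    "model": "X200",
    "serial_number": "",
    "warranty_start": "2021-06-01",
    "warranty_end": "2021-05-31",
}
print(check_record(record))
# ['missing serial_number', 'warranty end is not after warranty start']
```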

Project schedules, particularly for mechanical and electrical assets, are often generated from an external Excel source rather than from the model's own data. This produces disconnected data that has no relationship to the modelled asset.
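Where an external spreadsheet already exists, reconciling it against the model at least shows how far the two have drifted apart. This minimal sketch assumes the spreadsheet has been saved as CSV with `tag`, `make`, and `model` columns, and that the model data has been exported to a dictionary keyed by tag; both layouts are hypothetical.

```python
import csv
import io

# Hypothetical equipment schedule maintained in a spreadsheet, saved as CSV.
SPREADSHEET_CSV = """tag,make,model
AHU-01,Acme,X200
AHU-02,Acme,X200
EF-05,Breeze,E10
"""

# Hypothetical tags and attributes exported from the model itself.
model_assets = {
    "AHU-01": {"make": "Acme", "model": "X200"},
    "AHU-02": {"make": "Acme", "model": "X250"},
    "EF-04": {"make": "Breeze", "model": "E10"},
}

def reconcile(sheet_text, model):
    """Compare an external schedule with model data, keyed on asset tag."""
    sheet = {row["tag"]: row for row in csv.DictReader(io.StringIO(sheet_text))}
    only_in_sheet = sorted(sheet.keys() - model.keys())
    only_in_model = sorted(model.keys() - sheet.keys())
    mismatched = sorted(
        tag
        for tag in sheet.keys() & model.keys()
        if any(sheet[tag][field] != model[tag][field] for field in ("make", "model"))
    )
    return only_in_sheet, only_in_model, mismatched

print(reconcile(SPREADSHEET_CSV, model_assets))
# (['EF-05'], ['EF-04'], ['AHU-02'])
```

Every tag in the output is a record someone will have to chase down by hand. Generating the schedule from the model in the first place removes the need for this check altogether.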

Conclusion

Data is a strategic asset that drives smart decision making, cost planning, risk reduction, and safety improvements. According to Statistics Canada, “In 2018, Canadian investment in data, databases and data science was estimated to be as high as $40 billion.” The agency notes that this is “higher than annual investment in industrial machinery, transportation equipment, and research and development.”

With BIM adoption gaining more and more traction in Canada, it is important for the industry as a whole to understand the need for accurate and reliable data collection methods. As datasets grow ever larger, processing them moves beyond what mere mortals can do accurately and into the realm of software algorithms, which need good source data to shine.
